For successful communication, interlocutors have to establish co-orientation to the ongoing task and to features of their environment. Psychology-driven approaches have investigated the ability to coordinate attention with one another, termed “joint attention” in this context, predominantly through the example of “gaze-following” (Scaife & Bruner 1975). Less is known about the contribution of multimodal resources and about the fine-grained interactional procedures participants use to establish co-orientation in both stable and unstable interactional environments.

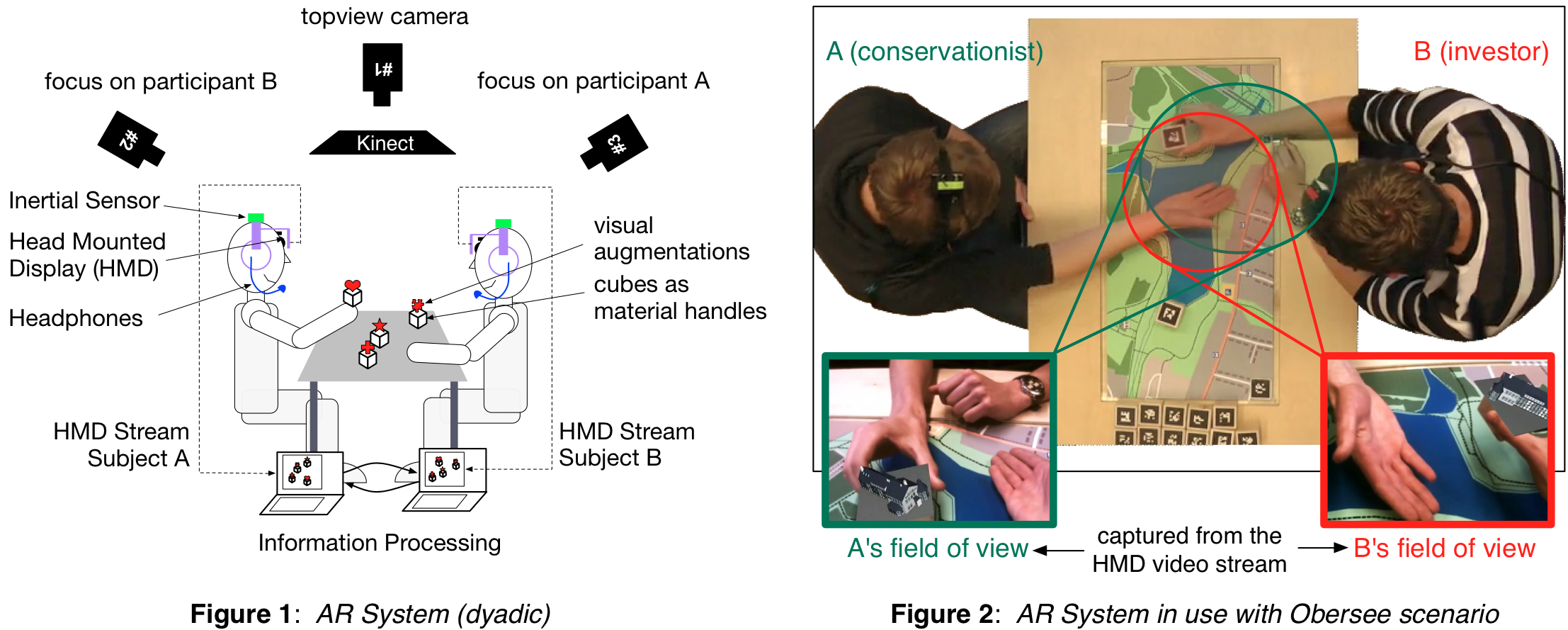

To investigate the participants’ orienting procedures beyond gaze coordination, we propose to study the phenomenon under the conditions of Augmented Reality (AR). Using our AR-based Interception and Manipulation System (ARbInI) for co-presently interacting participants (Dierker et al. 2009; Neumann 2011) (cf. Fig. 1), we are able to remove, manipulate or add communicatively relevant multimodal information in real time. Taking the system as a tool for linguistic research (Pitsch et al. 2013), we build on the psycholinguistic tradition of experimenting with communicational parameters (e.g. limiting visual access to the co-participants’ head, hands or material resources, displaying different information, or creating unstable interactional environments).

In our semi-experimental setup, pairs of users were seated across a table from each other and asked to jointly plan a fictional recreation area around the lake “Obersee” (cf. Fig. 2). To increase engagement, they were asked to take potentially conflicting roles (investor vs. conservationist). In addition to the given map of the Obersee, we provided 18 mediation objects, including both profit-oriented (e.g. a hotel) and preservation-oriented structures (e.g. a water protection sign), which could be negotiated and placed on the map. These “planning concepts” consisted of augmented objects (virtual representations) sitting on top of wooden blocks that served as material ‘handles’.

Against this background, we use the AR system as an instrument to investigate interactional coordination and alignment with regard to the participants’ practices for establishing co-orientation. By combining qualitative (Conversation Analysis) and quantitative (Data Mining) approaches, we address the following questions:

- What impact does “mutual monitoring” (which is limited when participants wear AR glasses) have on the participants’ procedures for establishing co-orientation and for coordinating their actions?

- To which set of cues (and how) can the multimodal complexity of human interaction be reduced so that procedures for establishing co-orientation can be detected by technical systems?

- Can communicational resources that are relevant in face-to-face interaction be shifted to and displayed in other modalities?

- What effect do specifically introduced disturbances have on the users’ procedures for establishing co-orientation? In what way do users attempt to repair or compensate for the induced effects?

In summary, our project (i) yields a novel research tool that pairs a high degree of experimental control with simultaneous recording of the users’ perceptions, and (ii) contributes scientific results concerning the multimodal organization of co-orientation, obtained from both Conversation Analysis and Data Mining.