Project C1 has investigated spatial alignment and complementary alignment of gestures between a human and an artificial agent who interact in a shared space. The mental representation of this interaction space facilitates the coordination of parallel actions. In the artificial intelligence part, the consequences of spatial perspective taking have been explored in a virtual setup where both partners jointly move and manipulate virtual objects. The cognitive robotics part has focused on perception and gesture control in a physical setup where a human and a robotic receptionist operate on the same map placed between them.

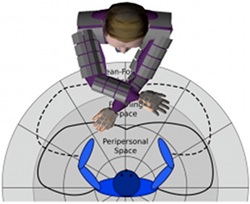

When two people closely face each other, the interaction space is formed by the overlap of their peripersonal spaces (the space immediately surrounding the body). We assume that the mental representation of the interaction space needs to be aligned in both partners to ensure smooth cooperation between them. A principal goal of the project has been to equip artificial agents with an awareness of interaction space. To this end, spatial representations for the peripersonal and interpersonal action space have been developed, and methods for the dynamic alignment of interpersonal space representations have been derived from them. Previously, significant progress had been achieved towards establishing and maintaining interaction spaces in two robotic scenarios (using a model of proxemics for a mobile robot and a humanoid torso robot), in body coding for peripersonal spaces (virtual agent), in the perception of gestures (physical agent), and in an integrated robotic receptionist scenario. These results have provided a basis for our work in the project.
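
The idea of an interaction space as the overlap of two peripersonal spaces can be illustrated geometrically. The following is only a minimal sketch under strong simplifying assumptions: each peripersonal space is approximated as a flat disk around the body centre, and the names `disk_overlap_area` and `in_interaction_space` are hypothetical and not taken from the project's software.

```python
import math


def disk_overlap_area(c1, r1, c2, r2):
    """Area shared by two disks with centres c1, c2 and radii r1, r2.

    Assumption: a peripersonal space is approximated as a 2D disk.
    Returns 0.0 when the disks do not overlap.
    """
    d = math.dist(c1, c2)
    if d >= r1 + r2:
        return 0.0  # too far apart: no shared space
    if d <= abs(r1 - r2):
        return math.pi * min(r1, r2) ** 2  # one disk lies inside the other
    # Standard lens-area formula for two intersecting circles.
    a1 = r1 ** 2 * math.acos((d ** 2 + r1 ** 2 - r2 ** 2) / (2 * d * r1))
    a2 = r2 ** 2 * math.acos((d ** 2 + r2 ** 2 - r1 ** 2) / (2 * d * r2))
    tri = 0.5 * math.sqrt(
        (-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2)
    )
    return a1 + a2 - tri


def in_interaction_space(point, c1, r1, c2, r2):
    """True if the point lies inside both disks, i.e. within reach of both partners."""
    return math.dist(point, c1) <= r1 and math.dist(point, c2) <= r2
```

With two partners one metre apart and a reach of one metre each, `in_interaction_space((0.5, 0.0), (0, 0), 1.0, (1, 0), 1.0)` is true, whereas a point behind either partner is not part of the shared space.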

The project will proceed towards assuming less prior knowledge about the partner and the setup, and towards modelling more dynamic changes in interaction spaces. This will be studied along two main threads that complement each other.

Artificial Intelligence

In the Artificial Intelligence part, we adopt the Interactive Alignment Model by assuming that alignment occurs when interlocutors share the same mental representation of interaction space. Accordingly, interlocutors who share the same spatial representation of their shared environment are expected to be more successful in cooperation tasks than interlocutors who have different spatial representations of their shared space. To develop a model of interaction space which comprises alignment of spatial representations, we will (i) investigate spatial perspective taking for artificial agents as an unconscious mechanism comprising embodiment effects, (ii) develop methods to infer the partner’s spatial perspective with changing body orientation and position, (iii) investigate and develop a representation of interpersonal action space, and (iv) model adequate behaviour strategies based on concepts of proxemics to support successful and natural interaction with artificial agents.
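
Inferring the partner's spatial perspective from their position and body orientation can be illustrated with a plain change of coordinate frame. This is a minimal sketch, not the project's method: it assumes a 2D world, a body frame with x pointing forward and y pointing to the left, and the hypothetical function names `to_partner_frame` and `side_for_partner`.

```python
import math


def to_partner_frame(point, partner_pos, partner_heading):
    """Express a world-frame point in the partner's body frame.

    Assumptions: 2D coordinates, heading in radians measured from the
    world x-axis, body frame with x forward and y to the left.
    """
    dx = point[0] - partner_pos[0]
    dy = point[1] - partner_pos[1]
    c, s = math.cos(partner_heading), math.sin(partner_heading)
    # Apply the inverse rotation R(-heading) to the translated point.
    return (c * dx + s * dy, -s * dx + c * dy)


def side_for_partner(point, partner_pos, partner_heading):
    """Return 'left' or 'right' as seen from the partner's perspective."""
    _, y = to_partner_frame(point, partner_pos, partner_heading)
    return "left" if y > 0 else "right"
```

For an agent at the origin facing along x and a partner at `(1.0, 0.0)` facing back towards it (heading `math.pi`), an object at `(0.5, 0.2)` is on the agent's left but on the partner's right, which is the kind of mismatch that perspective taking has to resolve.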

Project members working on this part: Ipke Wachsmuth, Nhung Nguyen

Cognitive Robotics

In the Cognitive Robotics part, the loop between the perception of gestures and the production of gestures has been closed in an interactive scenario. In particular, the control of gesture generation takes the past and current activity of the partner into account. On the basis of social presence, the robot decides (i) whether the performance of a gesture is possible, (ii) whether the dynamics of the gesture need to be changed (slow down, wait), or (iii) whether the gesture should be performed differently (in kinematics and dynamics). These skills are enabled and mediated by an appropriate representation of the interaction space.
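
The three-way decision described above could be sketched as a small rule set. Everything in this example is an illustrative assumption: the inputs (a presence flag and clearances between the planned arm path and the partner), the thresholds `safe_m` and `caution_m`, and the names `GestureDecision` and `decide_gesture` are hypothetical and do not describe the robot's actual controller.

```python
from enum import Enum


class GestureDecision(Enum):
    NOT_POSSIBLE = "not possible"
    PERFORM = "perform"
    SLOW_DOWN = "slow down"
    WAIT = "wait"
    PERFORM_DIFFERENTLY = "perform differently"


def decide_gesture(partner_present, path_clearance_m, alt_clearance_m=None,
                   safe_m=0.30, caution_m=0.15):
    """Pick a gesture strategy from hypothetical clearance measurements.

    path_clearance_m: smallest distance between the planned arm path and the partner.
    alt_clearance_m: the same for an alternative trajectory, if one exists.
    The thresholds are illustrative values, not taken from the project.
    """
    if not partner_present:
        return GestureDecision.NOT_POSSIBLE  # (i) no addressee for the gesture
    if path_clearance_m >= safe_m:
        return GestureDecision.PERFORM
    if alt_clearance_m is not None and alt_clearance_m >= safe_m:
        return GestureDecision.PERFORM_DIFFERENTLY  # (iii) change kinematics
    if path_clearance_m >= caution_m:
        return GestureDecision.SLOW_DOWN  # (ii) change dynamics
    return GestureDecision.WAIT  # (ii) partner currently occupies the path
```

In this sketch, a partner's hand 0.2 m from the planned path leads to a slowed-down gesture, unless an alternative trajectory with enough clearance is available.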

In order to keep the mutual representation of the interaction space aligned, social signalling is incorporated not only into pointing gestures but also on a larger scale: based on an adapted model of proxemics, interpersonal distances and configurations are interpreted as social signals and fed into an overall concept of spatial awareness. The improvements have been incorporated into the demonstration setup of the robotic receptionist and have been shown to increase the usability and acceptance of the demonstration system.
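
Interpreting interpersonal distance as a social signal can be sketched as a simple classification into proxemic zones. The adapted model used in the project is not specified here, so this example assumes the commonly cited approximate zone boundaries from Hall's proxemics; the function name `proxemic_zone` is hypothetical.

```python
def proxemic_zone(distance_m):
    """Map an interpersonal distance in metres to a proxemic zone.

    Assumption: approximate boundaries from Hall's classic proxemics
    (about 0.45 m, 1.2 m and 3.6 m), not the project's adapted model.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < 0.45:
        return "intimate"
    if distance_m < 1.2:
        return "personal"
    if distance_m < 3.6:
        return "social"
    return "public"
```

A receptionist robot could, for instance, treat a visitor entering the social zone as a cue to attend to them and a visitor entering the personal zone as a cue that a face-to-face interaction has been opened; such a mapping is again an assumption made for illustration.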

Project members working on this part: Sven Wachsmuth, Patrick Holthaus