
Project A1 has investigated alignment phenomena that arise from an internal model of partners' mental states, namely their cognitive and affective states. Concretely, we have considered the following research questions:

- Modeling partners' affective states for emotional alignment based on empathy

- Modeling partners' cognitive states for the alignment of attention foci based on joint attention

- Investigating how emotions and gaze modulate partners' visual attention during situated sentence comprehension

Artificial Intelligence (AI)

The AI part has investigated cooperative communication (with shared goals) in human-agent settings by implementing and extending operational partner models that enable emotional alignment through empathy and the alignment of attention foci through joint attention. We obtained the following results:

A computational model of empathy

- Generates an empathic emotion by means of facial mimicry and role-taking

- Provides different degrees of empathy based on empathy modulation factors such as liking and familiarity

- Applied in a conversational agent scenario: EMMA empathizes with MAX to different degrees depending on her liking for MAX (see demo)

- Applied in a spatial interaction task scenario in cooperation with CRC project C1: MAX's degree of empathy modulates his spatial helping actions, and his liking for the partner increases when the partner helps him (see demos)
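The empathy model is described above only at the level of its components. As a rough illustration, the following Python sketch shows one way these pieces could fit together; it is not the A1 implementation. The PAD (Pleasure-Arousal-Dominance) emotion representation, the weighted mean used for the degree of empathy, the linear blend toward the partner's emotion, and the fixed liking increment are all assumptions made for illustration, and the names `PAD`, `degree_of_empathy`, `empathic_emotion`, and `update_liking` are hypothetical. The partner's emotion is taken as given, standing in for the estimate that facial mimicry and role-taking would produce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PAD:
    """An emotion as a point in Pleasure-Arousal-Dominance space (assumed representation, axes in [-1, 1])."""
    pleasure: float
    arousal: float
    dominance: float


def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    """Restrict x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def degree_of_empathy(liking: float, familiarity: float,
                      w_liking: float = 0.5, w_familiarity: float = 0.5) -> float:
    """Hypothetical: combine modulation factors (each in [0, 1]) into a degree of empathy in [0, 1]."""
    total = w_liking + w_familiarity
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return clamp((w_liking * liking + w_familiarity * familiarity) / total)


def empathic_emotion(own: PAD, partner: PAD, degree: float) -> PAD:
    """Hypothetical: move the agent's own emotion toward the partner's estimated emotion.

    degree = 0 keeps the agent's own state (no empathy);
    degree = 1 adopts the partner's estimated state (full empathy).
    """
    d = clamp(degree)

    def blend(a: float, b: float) -> float:
        return a + d * (b - a)

    return PAD(blend(own.pleasure, partner.pleasure),
               blend(own.arousal, partner.arousal),
               blend(own.dominance, partner.dominance))


def update_liking(liking: float, partner_helped: bool, step: float = 0.1) -> float:
    """Hypothetical: raise liking by a fixed step when the partner helps, capped at 1."""
    return clamp(liking + step) if partner_helped else clamp(liking)


if __name__ == "__main__":
    neutral_emma = PAD(0.0, 0.0, 0.0)
    sad_max = PAD(-0.8, 0.2, -0.4)  # stand-in for the mimicry/role-taking estimate
    for liking in (0.8, 0.0):
        d = degree_of_empathy(liking, familiarity=0.4)
        print(f"liking={liking}: degree={d:.2f}, emotion={empathic_emotion(neutral_emma, sad_max, d)}")
```

A lower liking value yields a lower degree of empathy and hence a weaker shift toward MAX's state, which mirrors the qualitative pattern described in the list; the linear blend is simply the most basic choice with that property.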

An operational model for joint attention

- Provides high-level sensory information on gaze behavior (see first video)

- Produces natural timing of gaze behavior

- Participants prefer gaze behaviors with timing aligned to their own

- Uses new real-time techniques for eye tracking in 3D (see second video)
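The list states that the agent produces natural gaze timing and that participants prefer timing aligned to their own, without specifying how such alignment is computed. The following Python sketch shows one simple way to align timing, assuming the agent can measure how long the partner takes to follow its gaze shifts (for example, from the eye tracker). The exponential moving average, the default delay, and the bounds are placeholders chosen for illustration, not values or methods from the A1 model, and `GazeTimingAligner` is a hypothetical name.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GazeTimingAligner:
    """Hypothetical sketch: adapt the agent's gaze-following delay to the partner's own latencies."""
    default_delay_s: float = 1.0   # placeholder used before any partner latency is observed
    alpha: float = 0.3             # weight of the newest observation in the moving average
    min_delay_s: float = 0.2       # placeholder lower bound on the agent's delay
    max_delay_s: float = 3.0       # placeholder upper bound on the agent's delay
    _estimate: Optional[float] = field(default=None, init=False, repr=False)

    def observe_partner_latency(self, latency_s: float) -> None:
        """Record how long the partner took to follow one of the agent's gaze shifts."""
        if latency_s < 0:
            raise ValueError("latency must be non-negative")
        if self._estimate is None:
            self._estimate = latency_s
        else:
            # Exponential moving average: recent behavior counts more than older behavior.
            self._estimate = self.alpha * latency_s + (1 - self.alpha) * self._estimate

    def following_delay(self) -> float:
        """Delay the agent waits before following the partner's gaze to a new referent."""
        value = self.default_delay_s if self._estimate is None else self._estimate
        return min(self.max_delay_s, max(self.min_delay_s, value))


if __name__ == "__main__":
    aligner = GazeTimingAligner()
    for latency in (0.6, 1.0, 0.8):  # illustrative measurements in seconds
        aligner.observe_partner_latency(latency)
        print(f"observed {latency:.1f} s -> agent delay {aligner.following_delay():.2f} s")
```

Smoothing the observed latencies, rather than copying the most recent one, keeps the agent's timing stable while still drifting toward each partner's own pace; whether this particular scheme matches the participants' preference would have to be tested empirically.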

Psycholinguistics (Psy. Ling.)

State of the art at project start: At the outset of the A1 project, a range of linguistic and non-linguistic information sources had been found to influence language comprehension and visual attention. By contrast, little was known about how a speaker's face (e.g., emotional expressions such as smiling vs. looking sad, or gaze) primes a listener's semantic and syntactic processing, and the expectations that accompany it, during comprehension. Such non-linguistic priming had also not been clearly characterized in the interactive alignment model.

Goals: Against this background, the goals of the experimental part of project A1 were to examine

- the contribution of facial-emotion priming to comprehenders’ incremental semantic interpretation;

- the priming of thematic role assignment and syntactic structuring through emotional facial expressions;

- emotion-based priming of comprehension as a function of speaker characteristics (e.g., perceived social competence of virtual agents vs. humans) and a comparison of valenced prime cues with other cues such as speaker gaze;

- emotion-based priming depending on listener characteristics (specifically, age).

The results can be summarized as follows:

- R1: We obtained empirical evidence that facial emotional expressions rapidly prime incremental semantic interpretation. The results are documented in three Master's theses (DeCot, 2011; Glados, 2012; Münster, 2012), a 6-page conference paper (Carminati & Knoeferle, 2013), and a journal article (Carminati & Knoeferle, 2013).

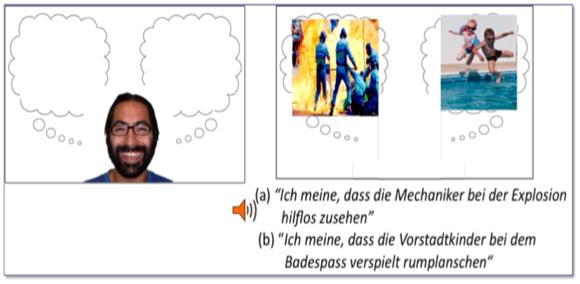

Example picture for the studies in R1. On the left, the picture shows the prime face (here with positive valence); on the right, it shows the target display with photographs of a negative and a positive event. The corresponding sentence was presented auditorily together with the target display and is included in written form below.

- R2: By contrast, the speaker's emotional facial expressions did not modulate syntactic structuring or thematic role assignment.

- R3: In collaboration with the computational part of A1, we conducted an empirical study to evaluate empathy in the virtual agent EMMA (Boukricha, Wachsmuth, Carminati, & Knoeferle, 2013). We also followed up on the null results from R2 by examining how another partner cue, speaker gaze, affects syntactic structuring and thematic role assignment. Unlike the emotional facial primes, speaker gaze rapidly affected thematic role assignment in SVO and OVS sentences, although its effects on semantic-referential processes differed from those on thematic role assignment (Knoeferle & Kreysa, 2012).

Example picture for speaker gaze effects (R3):

- R4: We conducted two experiments with older adults and compared their priming effects with those of younger adults (based on the results from WP1; see Carminati & Knoeferle, 2013; Carminati & Knoeferle, in press; DeCot, 2011; Glados, 2012; Münster, 2012). In brief, younger adults exhibited reliable emotion-based priming effects with negative stimuli, whereas older adults showed these priming effects for positive stimuli only.

A figure from Carminati and Knoeferle (PLoS One, 2013, p. 10) illustrating the age-related positivity and negativity biases in first-fixation duration.